With the rapid rise of AI tools like ChatGPT and Bard, many users are eager to harness their power for everything from drafting emails to generating creative content. However, a critical question often arises: How secure is the information we feed into these platforms? Are there risks to confidentiality and data privacy? Let’s dive into this important topic and provide clear guidance on how to protect your sensitive data when using AI language models.

The Crucial Rule: Never Share Confidential Information

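The simplest and most important rule to remember is: do not enter confidential or sensitive information into public AI platforms. This is a security requirement, not just a precaution. For instance, in 2023 security researchers reported that login credentials for more than 100,000 ChatGPT accounts, stolen by info-stealing malware on users' own devices, were being traded on dark-web marketplaces. Anyone holding those credentials could read the accounts' saved chat histories, along with any sensitive data users had typed into them.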

Even when these platforms invest heavily in security, you are still sharing your information with a system you do not control. Once your data leaves your hands, there is always some risk that it could be accessed by third parties or misused, even unintentionally.

Why Avoiding Sensitive Data Matters

When you use ChatGPT or similar services, your input is processed on servers managed by the service provider. This means:

Your data could be stored or logged and, depending on the provider's settings, used to train future models.

Employees or automated systems might access it.

It could be vulnerable to hackers or data leaks.

You lose control over who sees the information.

These risks make it critical to refrain from sharing anything that could harm your personal, professional, or organizational privacy if exposed.

How to Safely Use AI for Sensitive Data: Local Models

If your work absolutely requires AI assistance with sensitive or protected information, the safest approach is to run a large language model locally on your own device. Open-source projects like GPT4All (gpt4all.io) provide tools to download AI models that, once downloaded, run entirely on your computer without sending your prompts to external servers.

Here’s how this works:

Install the application on your Windows, Linux, or Mac device.

Download an AI model of your choice (e.g., LLaMA, Vicuna).

Leave any optional data-sharing setting turned off.

The AI runs locally, meaning your inputs and the generated responses never leave your computer.

This method offers maximum privacy, provided your device itself is secure. Unlike with cloud-based AI services, no third party receives your queries or outputs.

Balancing Convenience and Security for Everyday Use

For less sensitive tasks, such as brainstorming blog post outlines or improving the tone of a generic email, using public AI models like ChatGPT or Bard is generally low-risk. In these cases, you are not revealing personal or proprietary information that could cause harm if disclosed.

Use this simple litmus test to decide what to input:

Would you be comfortable sending the content in an email to a complete stranger?

Would your company approve sharing this information publicly without restrictions?

If the answer is no, avoid entering that data into cloud-based AI services.

Practical Examples

Safe to input: A memo politely asking colleagues to avoid microwaving fish in the communal kitchen.

Not safe to input: Confidential sales figures, private client information, or unreleased product plans.
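The litmus test and examples above can be expressed as a small pre-submission check. The following is a minimal Python sketch under stated assumptions: the function name `check_before_sending`, the `Verdict` type, and the two regular expressions are hypothetical, chosen only for illustration. Pattern matching catches obvious markers such as the word "confidential" or an email address, but it cannot recognize unlabeled sales figures or product plans, so the two human judgment questions remain the real test.

```python
import re
from dataclasses import dataclass

# Hypothetical, illustrative patterns only. A regex can flag obvious markers,
# but it cannot recognize unlabeled confidential content such as sales figures.
SENSITIVE_PATTERNS = {
    "confidential marker": re.compile(
        r"\b(confidential|internal only|do not distribute)\b", re.IGNORECASE
    ),
    "email address": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


@dataclass
class Verdict:
    safe: bool          # True only if every check passed
    reasons: list[str]  # why the text should stay out of cloud AI tools


def check_before_sending(
    text: str, ok_to_email_stranger: bool, ok_to_share_publicly: bool
) -> Verdict:
    """Apply the two litmus-test questions, then a simple pattern screen."""
    reasons = []
    # The two human judgment calls from the litmus test.
    if not ok_to_email_stranger:
        reasons.append("would not email this to a stranger")
    if not ok_to_share_publicly:
        reasons.append("company would not approve public sharing")
    # Automated screen for obvious sensitive markers (hypothetical patterns).
    for label, pattern in SENSITIVE_PATTERNS.items():
        if pattern.search(text):
            reasons.append(f"contains {label}")
    return Verdict(safe=not reasons, reasons=reasons)


if __name__ == "__main__":
    memo = "Please avoid microwaving fish in the communal kitchen."
    print(check_before_sending(memo, True, True))
    # Verdict(safe=True, reasons=[])

    leak = "CONFIDENTIAL: Q3 figures for client jane.doe@example.com"
    print(check_before_sending(leak, False, False).reasons)
```

Any single flag marks the text as unsafe. This deliberately errs on the side of caution: when in doubt, keep the text out of cloud-based AI services.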

Final Thoughts

AI language models are powerful tools, but they come with inherent risks related to data privacy and confidentiality. The key takeaway is to always treat AI interfaces as you would any shared communication channel:

Never share sensitive or confidential info on third-party platforms.

Use local AI models if confidentiality is paramount.

Apply common sense and privacy-first thinking to all AI interactions.

By following these guidelines, you can enjoy the benefits of AI technology without compromising your security.

If you found this guide helpful and want to stay informed about technology safety and best practices, be sure to subscribe to channels or newsletters that focus on digital security and AI literacy. Protect your data: your privacy depends on it!

News

Unraveling the Wildest Moon Landing Myths: A Dive into Conspiracy Theories That Defy Logic

On July 20th, 1969, humanity celebrated a monumental achievement: Apollo 11’s successful landing on the moon. Neil Armstrong’s famous words,…

Unveiling Consumer Trends: Super Bowl Betting Patterns, Peloton’s Leadership Shift, and Amazon’s Foray into Telehealth Services

As consumer interests evolve, several notable trends are emerging in sports betting, corporate leadership, and healthcare innovation. Recent insights reveal…

Unlocking the Mind’s Eye: Exploring the Science of Remote Viewing with Dr. Simeon Hein

Remote viewing, a term that evokes images of psychic spies and mysterious government projects, has recently gained renewed attention thanks…

Exploring the Shadows: A Journey through the World’s Most Classified Government Facilities

Across the globe, certain government facilities remain shrouded in secrecy, sparking intrigue, speculation, and sometimes conspiracy theories. These heavily guarded…

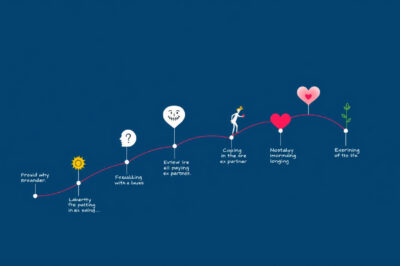

The Psychological Timeline: When Do Exes Begin to Feel a Sense of Longing?

Breaking up is one of the most emotionally challenging experiences in life. Many people wonder, “When will my ex start…

Unveiling the Unseen: 13 Intriguing Indicators You May Have Been Abducted by Aliens

Alien abduction is a topic that has fascinated and mystified people for decades. While some dismiss such encounters as mere…

End of content

No more pages to load